Deepfakes and the Ethics of AI in Video Editing 2023

The emergence of deepfake video technology has raised many ethical questions about using Artificial Intelligence to create synthetic media. As deepfakes become more sophisticated, they have the potential to wreak havoc through the spread of misinformation and infringement on personal rights. However, they also create new opportunities for creativity and self-expression. This complex issue requires nuanced ethical guidelines to promote responsible innovation in AI media creation.

What Are Deepfakes?

Deepfakes are videos that have been manipulated with AI to replace one person’s likeness or voice with another’s. The technology uses machine learning algorithms to analyze facial expressions and speech patterns from different video sources. The algorithms then blend these inputs to create convincing synthetic footage.
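To make the final “blend” step concrete, here is a toy sketch of alpha compositing, a standard way to paste a generated face region into a target frame. It assumes the synthetic face has already been produced and aligned by some model (not shown), represents frames as tiny grayscale grids, and uses a hand-written mask. Real face-swap tools work on full-color video and typically use more elaborate blending, so treat this as an illustration of the idea rather than how any particular tool works.

```python
# Toy illustration of the final "blend" step in a face-swap pipeline.
# Assumption: the synthetic face is ALREADY generated and aligned to the
# target frame; producing it is the job of a learned model not shown here.

Frame = list[list[float]]  # grayscale pixel intensities in [0, 1]


def alpha_blend(target: Frame, synthetic: Frame, mask: Frame) -> Frame:
    """Composite `synthetic` over `target` using a per-pixel mask.

    mask = 1.0 keeps the synthetic pixel, 0.0 keeps the original pixel,
    and values in between feather the seam between the two.
    """
    if not (len(target) == len(synthetic) == len(mask)):
        raise ValueError("frames and mask must have the same height")
    out: Frame = []
    for t_row, s_row, m_row in zip(target, synthetic, mask):
        if not (len(t_row) == len(s_row) == len(m_row)):
            raise ValueError("frames and mask must have the same width")
        # Weighted average of synthetic and original pixel values.
        out.append([m * s + (1.0 - m) * t for t, s, m in zip(t_row, s_row, m_row)])
    return out


target = [[0.2, 0.2, 0.2],
          [0.2, 0.2, 0.2]]
synthetic = [[0.8, 0.8, 0.8],
             [0.8, 0.8, 0.8]]
# Fully replaced on the left, feathered in the middle, untouched on the right.
mask = [[1.0, 0.5, 0.0],
        [1.0, 0.5, 0.0]]

print(alpha_blend(target, synthetic, mask))
```

The soft 0.5 values in the mask mix real and synthetic pixels along the seam, which is one reason a well-blended edit is hard to spot by eye.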

Early deepfake videos posted on Reddit gained notoriety by face-swapping celebrities into pornographic videos without their consent. Since then, the technology has advanced rapidly, lowering the barriers to creating videos that falsely depict public figures or private individuals saying or doing things they never did.

The Liar’s Dividend: Disinformation and Plausible Deniability

One primary ethical concern around deepfake videos is their potential to spread disinformation through increasingly realistic fabricated footage, also known as synthetic media. The core issue is that deepfakes create plausible deniability, both for those who distribute deceptive content and for anyone who wants to wave away inconvenient evidence.

Even when a damaging fake video is debunked, it can leave lingering doubt in viewers’ minds. The reverse effect is the “liar’s dividend”: because convincing fakes exist, purveyors of misinformation can dismiss authentic footage as a potential deepfake.

Powerful actors could exploit this to discredit opponents or deflect blame in controversies. Because any recording might have been tampered with, doubt spreads to all media, undermining public discourse.

Reputational Harm and Nonconsensual Pornography

Most notoriously, deepfake technology has been used to create nonconsensual intimate imagery of celebrities and ordinary citizens. Face-swapped pornographic videos inflict reputational damage and psychological trauma on the person portrayed.

The same techniques that enable nonconsensual deepfakes also facilitate revenge porn, exacerbating harassment issues. These unethical applications demand consideration of personal rights and consent when developing policies around synthetic media.

Copyright Infringement and Intellectual Property

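In addition to reputational harm, the unauthorized use of someone’s likeness in deepfakes raises legal issues around publicity rights and intellectual property. Whether altering copyrighted material through AI editing counts as fair use depends on the circumstances; in the United States, courts weigh factors such as the purpose of the use and its effect on the market for the original work. Even where such edits are legal, the ethical implications are less clear.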

Should deceased celebrities and historical figures, through their estates or descendants, have a say in how their images are manipulated or portrayed? Do living public figures have more claim over their representation than private individuals do? These questions sit at the intersection of AI ethics and personal rights.

Deepfake Pornography: Free Speech vs. Harm

A debate exists over whether generating nonconsensual intimate media should be protected as free speech or banned to prevent harm. Some defend access to deepfake pornography on libertarian grounds, while critics argue it violates personal autonomy and dignity.

Since pornography fundamentally differs from political satire or art, policymakers face challenges balancing freedom of expression with preventing the exploitation of vulnerable groups. As deepfakes become more accessible, this issue grows more urgent.

Algorithmic Bias and Representation

Like other AI systems, deepfake video algorithms can inherit and even amplify biases in their training data. For instance, they may replicate some skin tones or facial structures more accurately than others. When training data underrepresents particular groups, that imbalance carries through to the synthetic media the models produce.

To ensure fair and ethical use of the technology, developers should audit for biases and work to improve model inclusivity. Community media-sharing standards should also discourage the deceptive overrepresentation or underrepresentation of marginalized identities.
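One simple starting point for such an audit is to compare a quality metric across demographic groups and flag any group the model serves noticeably worse. The sketch below assumes you already have per-sample scores (for example, a hypothetical face-reconstruction quality score in [0, 1]) labeled by group. The group names, scores, and the 0.05 gap threshold are all illustrative; a real audit would also need well-chosen metrics, adequate sample sizes, and statistical testing.

```python
from collections import defaultdict
from statistics import mean


def audit_by_group(records, max_gap=0.05):
    """Compare a per-sample quality score across groups.

    records: iterable of (group_label, score) pairs; the score is any
    hypothetical quality metric in [0, 1] where higher is better.
    Returns (per-group means, sorted list of groups trailing the
    best-served group by more than `max_gap`).
    """
    scores_by_group = defaultdict(list)
    for group, score in records:
        scores_by_group[group].append(score)
    if not scores_by_group:
        return {}, []

    means = {group: mean(vals) for group, vals in scores_by_group.items()}
    best = max(means.values())
    # Flag groups whose average quality falls too far below the best group.
    flagged = sorted(g for g, m in means.items() if best - m > max_gap)
    return means, flagged


# Illustrative data only; not measurements from any real model.
records = [
    ("group_a", 0.92), ("group_a", 0.90),
    ("group_b", 0.91), ("group_b", 0.89),
    ("group_c", 0.78), ("group_c", 0.80),
]
means, flagged = audit_by_group(records)
print(means)
print(flagged)
```

Comparing each group against the best-served group, rather than against an overall average, keeps a large majority group from masking a poorly served minority.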

Deepfakes in Entertainment: New Possibilities for Storytelling

Despite ethical perils, deepfake technology creates new possibilities for creativity and entertainment. Visual media productions could use synthetic footage respectfully to build more imaginative worlds for fictional stories.

Filmmakers have long used related techniques such as compositing and rotoscoping to place actors in fantastical scenarios. When used consensually and transparently, AI tools such as deepfake software have the potential to push creative boundaries.

Augmented Reality and Resurrecting the Past

Looking further ahead, sophisticated AI media synthesis may eventually give rise to augmented or virtual reality experiences that seamlessly blend actual and simulated footage.

Deep learning could also recreate historical figures in simulations that interact with modern audiences. While such simulations raise philosophical questions about reality and consciousness, they also represent a creative frontier.

The Democratization of Media Production

Creating highly convincing deepfakes still takes considerable technical skill and computing power. However, that barrier is falling quickly as consumer-grade tools like FakeApp and more accessible cloud platforms from tech companies put the technology within wider reach.

This trend toward democratized synthetic media production could empower everyday creators to become media manipulators for positive or negative ends. Responsible stewardship requires planning for the ramifications of these capabilities spreading widely.

The Need for Media Literacy

As synthetic media proliferates, developing media literacy will be critical for citizens to understand how to interpret online content responsibly. Education on deepfakes can provide mental self-defense against disinformation.

Fact-checking and provenance-tracing tools are also emerging to help validate media authenticity. Ultimately, critical thinking is the best safeguard against deception in a world awash with advanced AI creations.
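As a minimal illustration of one provenance idea, a publisher could release a cryptographic hash of its original footage so that anyone can check whether a copy is byte-for-byte identical. This sketch uses SHA-256 from Python’s standard `hashlib`; the example bytes and the `published` set of hashes are hypothetical. Exact hashing fails as soon as a platform re-encodes or crops a video, which is why provenance efforts such as the C2PA standard attach cryptographically signed metadata describing a file’s origin and edit history rather than relying on a bare file hash.

```python
import hashlib


def sha256_hex(chunks) -> str:
    """Hash an iterable of byte chunks, e.g. a video file read piece by piece."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def matches_published_original(chunks, published_hashes: set[str]) -> bool:
    """True only if the content is byte-for-byte identical to a published original."""
    return sha256_hex(chunks) in published_hashes


# Hypothetical example: the publisher releases the hash of its original footage.
original = b"frame data from the original broadcast"
published = {sha256_hex([original])}

print(matches_published_original([original], published))
tampered = original.replace(b"original", b"altered")
print(matches_published_original([tampered], published))
```

Hashing in chunks lets the same function handle large video files, for example `sha256_hex(iter(lambda: f.read(1 << 20), b""))` on a file `f` opened in binary mode.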

The Role of Social Media Platforms

Social networks like Facebook and Twitter provide fertile ground for deepfakes to go viral through shares and links. These platforms face pressure to flag or remove AI-altered media, especially deepfakes featuring private individuals without consent. Here are a few ways social media platforms can play a role in addressing the ethical implications of deepfakes:

Create robust policies with clear guidelines on deepfake content, spelling out what is considered acceptable and what is considered malicious.

Deploy AI and machine learning tools that can quickly detect and flag deepfake content, and collaborate with academic institutions and research bodies to stay current on the latest deepfake techniques and countermeasures.

Set up reporting and moderation mechanisms so users can easily report suspected deepfake content, backed by a response team that can swiftly investigate and remove false or harmful content.

Implement ethical monetization and advertising policies so that creators who use deepfakes for malicious purposes cannot monetize that content on the platform, and scrutinize advertisements to avoid promoting or spreading harmful deepfakes.

Educate users through awareness campaigns that explain what deepfakes are and how to spot them, and share tools and resources that help users scrutinize and verify content.

Through a proactive, transparent, and responsible approach, social media platforms can serve as strong bulwarks against the potentially harmful impact of deepfakes.

Legal Rights to Your Digital Identity

The rise of synthetic media raises the question of whether people should have explicit legal rights to control the use of their digital identities. Some countries already recognize personality rights that protect individuals’ likenesses from exploitation without consent.

More comprehensive protections covering biometric privacy, data ownership, and the simulation of individuals without their approval may emerge to close the ethical gaps that deepfakes and similar AI creations expose in current laws.

Ethical Codes and Industry Standards

Until strong regulations appear, self-governance through voluntary professional codes of ethics offers a starting point to guide synthetic media development responsibly. Research groups like the Partnership on AI study best practices for cultivating AI that respects human values.

Industry standards for transparency and consent in media creation, such as ethical labeling of AI-edited footage, could also address some concerns before harmful applications take hold.
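As a sketch of what ethical labeling could look like in practice, the code below builds a machine-readable disclosure label for an AI-edited clip and ties it to that exact file through a SHA-256 hash. The field names and the tool name are hypothetical and not taken from any published standard. Because the label is unsigned, anyone could strip or forge it; a real labeling scheme would need cryptographic signatures and platform support.

```python
import hashlib
import json


def make_ai_edit_label(content: bytes, tool: str, edits: list[str],
                       subject_consent: bool) -> str:
    """Build a JSON disclosure label for AI-edited footage (hypothetical schema)."""
    label = {
        "ai_edited": True,
        "tool": tool,                        # name of the editing tool used
        "edits": edits,                      # what was changed, e.g. ["face_swap"]
        "subject_consent": subject_consent,  # did the depicted person agree?
        # Binds the label to these exact bytes; any re-encode breaks the match.
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    return json.dumps(label, sort_keys=True)


def label_matches(label_json: str, content: bytes) -> bool:
    """Check that a label was issued for exactly this content."""
    label = json.loads(label_json)
    return label.get("content_sha256") == hashlib.sha256(content).hexdigest()


clip = b"example clip bytes"
label = make_ai_edit_label(clip, tool="example-face-swap-tool",
                           edits=["face_swap"], subject_consent=True)
print(label)
print(label_matches(label, clip))
print(label_matches(label, clip + b"tampered"))
```

Recording consent as an explicit field keeps the two concerns the paragraph names, transparency and consent, in the same machine-readable record.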

Ongoing Vigilance in an Evolving Landscape

AI-based media manipulation is a constantly moving target. While deepfakes are the most prominent issue today, new techniques regularly emerge, demanding updated responses. Maintaining ethical norms around synthetic media requires sustained effort and nuanced deliberation.

By taking a technology-neutral approach focused on outcomes like consent, harm, and misinformation, policies and norms can adapt flexibly to future innovations. We must keep deliberating over these ethical questions as AI capabilities scale toward generating fully customizable parallel realities.

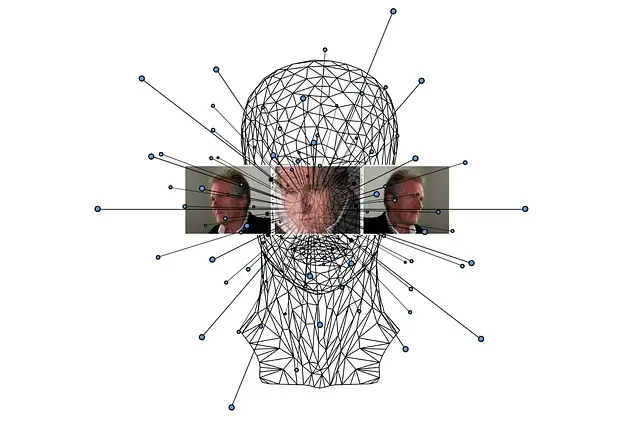

Advancing Deepfake Detection

Advancing deepfake detection technology is crucial to addressing the ethical concerns that deepfakes raise. Detecting manipulated content and limiting its spread can help safeguard personal privacy, prevent misinformation, and uphold the integrity of digital media.

Deepfake detection uses advanced technology, such as machine learning, to identify manipulated videos and images.

Detection algorithms analyze visual and audio cues for anomalies that are inconsistent with real footage. These cues include discrepancies in facial expressions, blinking patterns, shadows, and even subtle muscle movements. Many detectors are trained on large collections of both genuine and manipulated media so they learn which patterns separate the two; given a new clip, they output a score estimating how likely it is to be a deepfake rather than a definitive verdict.
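Blinking is one cue researchers have used. A common way to track it is the eye aspect ratio (EAR), computed from six landmarks around each eye: it stays roughly constant while the eye is open and drops toward zero during a blink. The sketch below assumes the landmarks come from some face-landmark detector (not shown), and the 0.2 closed-eye threshold, two-frame minimum, and 5-blinks-per-minute cutoff are hypothetical values chosen for illustration. An unusually low blink rate is only a weak hint, not proof of manipulation, and newer generators may well reproduce natural blinking.

```python
from math import dist

Point = tuple[float, float]


def eye_aspect_ratio(eye: list[Point]) -> float:
    """EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|).

    `eye` holds six landmarks in order p1..p6: p1 and p4 are the eye corners,
    p2 and p3 lie on the upper lid, p6 and p5 on the lower lid beneath them.
    """
    p1, p2, p3, p4, p5, p6 = eye
    return (dist(p2, p6) + dist(p3, p5)) / (2.0 * dist(p1, p4))


def count_blinks(ear_series: list[float], closed_threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below threshold."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < closed_threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # clip ended mid-blink
        blinks += 1
    return blinks


def blink_rate_is_suspicious(ear_series: list[float], fps: float,
                             min_blinks_per_minute: float = 5.0) -> bool:
    """Flag clips whose blink rate falls below a (hypothetical) minimum."""
    minutes = len(ear_series) / fps / 60.0
    if minutes == 0:
        return False
    return count_blinks(ear_series) / minutes < min_blinks_per_minute


# Usage with synthetic numbers: a 10-second clip at 30 fps.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
print(eye_aspect_ratio(open_eye))

ears = [0.3] * 300
ears[100:103] = [0.1] * 3  # one three-frame blink
print(count_blinks(ears), blink_rate_is_suspicious(ears, fps=30))
print(blink_rate_is_suspicious([0.3] * 300, fps=30))  # no blinks at all
```

Requiring several consecutive low-EAR frames keeps a single noisy landmark reading from being counted as a blink.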

However, deepfake detection isn’t foolproof. Creators constantly improve their techniques, leading to a cat-and-mouse game between generation and detection. Detection tools can also produce false positives (flagging genuine footage as fake) and false negatives (missing actual deepfakes). Despite these challenges, advancing detection technology is essential for addressing the ethical concerns surrounding deepfakes, preserving trust, and preventing the harmful impact of manipulated content in the digital age.
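To see what false positives and false negatives mean for a detector that outputs scores, the sketch below measures both error rates at a chosen decision threshold. The scores and labels are made-up toy data, not results from any real detector.

```python
def error_rates(scores, labels, threshold=0.5):
    """Return (false_positive_rate, false_negative_rate) at a threshold.

    scores: detector outputs, higher = more likely fake.
    labels: ground truth, True if the clip really is a deepfake.
    A clip is flagged as fake when its score >= threshold.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    fp = fn = positives = negatives = 0
    for score, is_fake in zip(scores, labels):
        flagged = score >= threshold
        if is_fake:
            positives += 1
            if not flagged:
                fn += 1  # missed a real deepfake
        else:
            negatives += 1
            if flagged:
                fp += 1  # genuine footage wrongly flagged
    fpr = fp / negatives if negatives else 0.0
    fnr = fn / positives if positives else 0.0
    return fpr, fnr


# Toy data: three deepfakes followed by three genuine clips.
scores = [0.9, 0.7, 0.4, 0.2, 0.6, 0.1]
labels = [True, True, True, False, False, False]

for t in (0.3, 0.5, 0.65):
    print(t, error_rates(scores, labels, t))
```

On this toy data, a threshold of 0.5 yields one false alarm and one missed fake (both rates 1/3). Raising it to 0.65 removes the false alarm but still misses the fake scored 0.4, while lowering it to 0.3 catches every fake but keeps the false alarm, so choosing a threshold is a trade-off between the two kinds of error.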

Conclusion

Deepfakes and synthetic media raise complex debates about free expression, personal rights, and the nature of truth itself. While these technologies have disturbing implications, they also create opportunities for creativity that should not be overlooked. Constructive policies to mitigate harm require perspectives from law, philosophy, technology, and beyond. If stakeholders work together responsibly, AI-powered media could expand communication and representation in our social fabric. But without foresight and cooperation, these same technologies could unravel trust in our digitally connected world.

For more AI and digital media posts, check out our blog posts “The 7 Best AI-Powered Editing Tools You Should Try” and “AI Art Generation: Why the Controversy? 2023”.